As regular readers know, I have had a team in the 48 Hour Film Project every year since it began in San Diego. This year we placed second in the whole competition, against a record 64 teams. We also received the audience award for our premiere screening and the award for best sound design. We are not a professional team; we only do this once a year with friends and family. For example, our festival-winning audio was operated by a 12-year-old who had acted for us in previous years (thank you, Julianna). The one exception is Jake Segraves (you may have corresponded with him through CineForm support), who has some real-world production experience and so does not quite share the amateur status of the rest of us. Still, Jake and I shot two-camera scenes with our personally owned Canon 7Ds and a single cheap shotgun mic on a painter's pole (attached with gaffer tape), recording to a Zoom H4n I got secondhand from a Twitter friend. The only additional camera was a GoPro shooting a time-lapse for our opening scene while we were setting up for the first part of the shoot. This was not a gear fest; fast and light is key to extreme filmmaking within 48 hours.

As this is a CineForm blog, we of course used our own tools and workflow throughout the process. We used four computers: two regular PCs (Jake's and my office desktops), an i7 laptop, and an older MacBook Pro (for the end credits). During the shoot day, whenever we moved location, we quickly transferred the video data, converting directly from CompactFlash to CineForm AVIs on local storage. That data was immediately cloned onto the other desktop PC over a standard GigE network. Getting two copies fast is important; we have had a drive crash during a 48 hour competition before. I used GoPro-CineForm Studio to convert the JPEG photo sequence from the GoPro Hero into a 2.5K CineForm AVI, then used FirstLight to crop it to 2.35:1 and reframe it. By 1am Sunday morning we had completed our shoot and ingested and converted all our media. One additional step saved time on audio sync: I used a tool to batch rename all the flash media to the time and date of capture, rather than Canon's MVI_0005.MOV or the Zoom H4n's default naming. All the imported media is now named like 11-35-24-2011-08-06.WAV (or .AVI), which makes finding video and audio pairs in the NLE very fast, even without properly timecoded sources. Last year we used Dual Eyes to sync the audio with picture; it works great, but it has to make a secondary intermediate file, which takes a little time. We found slating for manual sync, combined with the batch renaming, to be a tad faster. This was the first time we tried slating everything, and it was certainly worth it.
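The batch-rename tool isn't named here, so below is a minimal Python sketch of the same idea, not the tool we actually used. It assumes each file's modification time on the card reflects its capture time (so it should run on the camera and recorder files before conversion), and that the 7D and H4n clocks are set to roughly the same time. The helpers `timestamp_name`, `rename_by_capture_time`, and `pair_clips`, and the 5-second matching tolerance, are all hypothetical.

```python
from datetime import datetime
from pathlib import Path

# Naming pattern used in the post: hour-minute-second-year-month-day,
# e.g. 11-35-24-2011-08-06.WAV
NAME_FORMAT = "%H-%M-%S-%Y-%m-%d"


def timestamp_name(captured, suffix):
    """Build a new file name from a capture time, keeping the original extension."""
    return captured.strftime(NAME_FORMAT) + suffix


def rename_by_capture_time(folder, dry_run=True):
    """Rename every file in `folder` after its modification time.

    Assumption: the file's mtime on the card is (close to) its capture time.
    Returns a list of (old_name, new_name) pairs; with dry_run=True nothing
    on disk is changed, so the plan can be checked first.
    """
    plan = []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue
        captured = datetime.fromtimestamp(path.stat().st_mtime)
        target = path.with_name(timestamp_name(captured, path.suffix))
        if target.exists():
            # Skip rather than overwrite if two files land on the same second.
            continue
        plan.append((path.name, target.name))
        if not dry_run:
            path.rename(target)
    return plan


def pair_clips(video_names, audio_names, tolerance_s=5):
    """Match each video to the audio file whose start time is closest.

    Pairs further apart than `tolerance_s` seconds are left unmatched,
    since the camera and recorder clocks are only roughly in sync.
    """
    def start_time(name):
        return datetime.strptime(Path(name).stem, NAME_FORMAT)

    audio = [(start_time(a), a) for a in audio_names]
    pairs = {}
    for video in video_names:
        vt = start_time(video)
        best = min(audio, key=lambda item: abs((item[0] - vt).total_seconds()),
                   default=None)
        if best is not None and abs((best[0] - vt).total_seconds()) <= tolerance_s:
            pairs[video] = best[1]
    return pairs
```

Running `rename_by_capture_time` in dry-run mode first is a cheap way to spot clashes before touching the card; once the names carry capture times, `pair_clips` only has to compare parsed timestamps rather than scrub through footage.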

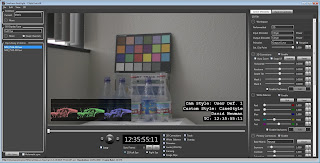

Starting at 1am Sunday, the value of FirstLight really kicked in. The color temperature of one of the two Canon 7Ds was way off; it seems my camera had overheated during six hours of operation under the San Diego sun (yet the other camera was fine; any ideas on this, readers?). The color grew worse from take to take, yet was fine at the beginning of each new setup (weird). I had to color-match the two cameras BEFORE the edit began, and anything too hard to correct would have been dropped from the edit selection (but I recovered everything). This is where FirstLight has no competition: I color corrected every take in the movie between 1 and 4am, without rendering a single frame and without knowing which shots would make the final cut. The correction included converting the curve from the CineStyle shooting profile to Rec709 video gamma (Encode curve set to CStyle, Decode curve to Video Gamma 2.2); adjusting the framing for a 2.35:1 mask, moving some images up or down and zooming others slightly where needed (boom mic or camera gear in frame, etc.); and adding some look/style to each scene. As the footage was already on the two editing desktops, we simply shared a Dropbox folder to carry all our color correction metadata. If you are not already using Dropbox with FirstLight, you can learn more here: http://vimeo.com/10024749. Through Dropbox the color corrections were instantly available on the second desktop PC (Jake's), which we used for primary editing. The correction data for the entire project of 302 clips was only 144,952 bytes, far less than a single frame of compressed HD.
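The 2.35:1 reframing comes down to simple mask arithmetic on a 1920x1080 frame. Here is a minimal Python sketch, assuming a vertically centered mask with the odd leftover row going to the bottom bar (FirstLight's exact rounding may differ); `scope_mask` and `max_vertical_shift` are hypothetical helpers, not FirstLight settings. It shows why small reframes only need the image slid up or down, while larger moves need a slight zoom.

```python
def scope_mask(width=1920, height=1080, aspect=2.35):
    """Letterbox a width x height frame to the given aspect ratio.

    Returns (active_height, top_bar, bottom_bar) in pixels, assuming the
    visible window is centered and any odd row goes to the bottom bar.
    """
    active = round(width / aspect)
    bars = height - active
    top = bars // 2
    return active, top, bars - top


def max_vertical_shift(width=1920, height=1080, aspect=2.35):
    """How far the image can slide behind the mask before blank rows show.

    Moving the picture up uncovers empty rows at the bottom of the frame,
    which stay hidden while the shift is no larger than the bottom bar;
    moving it down is limited by the top bar in the same way.
    """
    _, top, bottom = scope_mask(width, height, aspect)
    return {"up": bottom, "down": top}
```

With the defaults this gives an 817-pixel-high picture with 131- and 132-pixel bars, so a shot can slide roughly 130 pixels either way with no zoom at all; moving it further (say, to push a boom mic well out of frame) is where the slight zoom comes in.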

I laid out the base clips for the second half of the movie in the edit before crashing out for two hours of sleep. Jake arrived refreshed, with more like 5-6 hours of sleep, to begin the real edit; I was secondary editor, working on some early scenes (several of which didn't make the final cut). Editing was done in Premiere Pro 5.5 using the CineForm 2D 1920x1080p 23.976 preset. We had some effects elements, so once the edit was locked for a segment, Jake saved the current project and sent it to me. He then made a short segment containing the effects elements, ran a project trim, and sent that new small project and its trimmed media to Ernesto (our awesome lead actor and effects artist), who was running After Effects on my i7 laptop. The laptop was also sharing the color correction database via Dropbox. I loaded the latest full edit onto my PC (relinking media to the local data) while Ernesto was preparing the effects composition. I could now complete the color correction around the effects shots, based on the edit Jake had completed. Again we used FirstLight exclusively, as its color corrections automatically populate the AE comp. This works so well because the trimmed media has the same global IDs as the parent clips, so it picks up the same corrections. Once the color pass was done (about 5 minutes was all I had time for with the submission deadline pending) and Ernesto had finished the composition, we purged any cached frames so the latest color corrections would be used, then rendered out a new CineForm AVI to add back into the edit.

This workflow resulted in very little data transfer and hardly any rendering for the entire project: plenty of speed without compromising quality. The only other renders were tiny H264 exports emailed throughout the day to our composer, Marie Haddad, as the edit was locking in, since she was scoring the movie from her home. The final eight minute movie took about seven minutes to export to a thumb drive (I got a fast one, as thumb drives are normally the slowest element). We sent the film off to the finish line with 40 minutes to spare (it's a 30 minute drive). We then checked what we had rendered using a second copy (we render from both desktops at the same time), including the audio levels, which were fine. Had we needed any audio changes, we would have rendered only the audio to a WAV file (a matter of seconds), then used VirtualDub to replace the audio track (a minute or so); you learn many shortcuts doing this competition for so many years. We sent a second thumb drive to the finish line just in case, and it was needed: the first car ran out of fuel (of all things!). The second copy arrived with only one minute to spare.

Hope you enjoy our film.