If you haven’t heard already, Protune™ firmware and GoPro

App for the WiFi BacPac are now available -- get them now. While my team worked substantially more on

developing the GoPro App, this blog is about the origins and design of Protune.

Before I geek out on why and how Protune is so cool, some readers may want to know what it is and does (and may want to skip the rest). Protune is a suite of features designed to enable more professional image capture from your GoPro, while still being accessible to every GoPro user. Protune’s strongest emphasis is on image quality: it increases the data rate (decreasing compression) from an average of 15Mb/s to 35Mb/s. Small artifacts that can occur in detailed scenes or extreme motion are gone at 35Mb/s. Next, it adds the 24p frame rate (to the existing frame rate options), greatly easing the combination of GoPro footage with other 24p cameras, common in professional markets. Finally, the Protune image is designed for color correction; it starts with a flatter look that is more flexible for creative enhancement of the image in post-production. With the latest HERO2 firmware installed, Protune is enabled in the secondary tools menu.

Now for the why and how.

Protune has been a long time coming, and so has this blog

entry. Protune is an acknowledgement

that so many GoPro cameras are used for professional content creation –

Discovery Channel looks so much like a GoPro channel to me. Protune is also the first clear influence the CineForm group has had on in-camera features, of which we are super proud, yet most of the engineering was done by the super smart camera imaging team at GoPro HQ. For the novice Protune user, CineForm Studio 1.3 is set up to handle Protune image development, so all users can benefit from this cool new shooting mode. This synergy between the software and camera groups allows us to push both further. In the old CineForm days (non-GoPro) I would have probably blogged about helping with the design of a new camera log curve, and all the pluses and minuses of color tuning, months

before we would have had anything to show, but that was before we became part

of a consumer electronics company. Some

things must remain secret. Working at CineForm was exciting, but it is nothing

compared to the adventures I’ve already had at GoPro, with so much more to

come.

Protune for me started when HERO2 launched. Here was a camera that I could use in so many

ways, yet in certain higher dynamic range scenarios (I shoot a lot of live

theatre and was experimenting with placing GoPros around the stage), the

naturally punchy image limited the amount of footage I could intercut with

other cameras. It is of course the

intercutting of multiple camera types that is of greatest need for the

professional user. Note: there is one professional group I know of that exclusively uses GoPro HEROs, and that is our own media team, and even they now use the Protune shooting modes. Protune gets you more dynamic range, and I was amazed how much.

Sensor technology continues to grow, and we are seeing

awesome wide dynamic range images coming from premium cameras like ARRI Alexa

and even the amazingly affordable Blackmagic Cinema Camera, but as sensor size

(really pixel size) shrinks, there is an impact on dynamic range. Smaller pixels often result in reduced

dynamic range, yet so much has changed in so few years. Back in 2006, CineForm was very much involved

with Silicon Imaging and the development of the SI-2K camera, which was highly praised

and generally confirmed to have around 11 stops of dynamic range, good enough to be used on the first digitally acquired feature (well, mostly digital) to win the Oscars for Cinematography and Best Picture. The HERO2 sensor is smaller and has a significantly higher pixel count (11MPixel versus the SI-2K’s 2MPixel, so HERO2 pixels are way smaller), yet we are seeing a similar dynamic range.

It was not just five years of sensor technology that made

all the difference, it was using a log curve instead of contrast added to

Rec709 with 2.2 gamma -- geek speak for calibrating cameras to make the default

image look good on your TV. Making images

look great out of the box is the right thing to do for all consumer cameras,

and you get just that with HERO2 via HDMI to your TV. Yet TVs do not generally have 11 stops of dynamic range, maybe 9 on a good set, and that is after you’ve disabled all the crazy image “enhancements” that TVs have switched on by default (which typically reduce dynamic range further).

So why shoot wider dynamic range for something that may only be seen on a TV, computer monitor or smart phone (in decreasing order of dynamic range)? The answer is somewhat obvious to professional users, as color correction is part of the workflow. Color correction simply works better with more information from the source from which to choose the output range. Even the

average consumer today is more open to color correction of an image thanks to

the likes of Instagram filters. The more dynamic range you start with, the

better such stylized looks can work. Our

own media team wasn’t using great tools like Red Giant’s Magic Bullet Looks

until shooting Protune, which greatly increased the creative flexibility of the

GoPro image output.

So why a log curve, rather than just reduced contrast with

the regular gamma? This is a trickier question. The full dynamic range can be presented with the 2.2 gamma of standard TV, but it will look a little bland (flatter or milkier), just as log curves do on a TV without color correction, so it holds no aesthetic advantage over log. Log curves do have an advantage over gamma curves when your goal is to preserve as much of the source dynamic range as possible for later color correction.

Some imaging basics:

The sensor’s response to the light hitting it is effectively linear (not the incorrect use of “linear” to describe video gamma that still seems to be popular). Linear has the property that as light doubles (increasing one stop), the sensor value doubles. With an ideal 12-bit sensor, ignoring noise, there

are 4096 values of linear light. After

the first detectable level of light brings our ideal sensor from 0 to 1, a

doubling of light goes from 1 to 2, and the next stop from 2 to 4, and so on to

produce this series: 1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 2048 and 4095, each a doubling of brightness (up to the point where the sensor clips). An ideal 12-bit sensor has a theoretical maximum of 12 stops of dynamic range. Storing this 12-bit data uncompressed would give the most flexible data set (for color correction), yet it would be over 1000Mbits/s compared with today’s standard 1080p30 mode on HERO2 at 15Mb/s. Think how fast your SD card would fill, if it could actually support that fire hose of data. Fortunately it turns out that linear is a very

inefficient way of presenting light when humans are involved, as we see brightness

changes logarithmically--a stop change is the same level of brightness change to

us, whether it is from linear levels 1 to 2 or from 1024 to 2048. As a result, most cameras map their sensor’s 12, 14 or 16-bit linear image to an 8, 10 or 12-bit output with a log or gamma curve, exploiting the fact that we humans will not notice. Even the uncompressed mode of the new Blackmagic camera maps its 16-bit linear output through a curve and stores only 12 bits. This is not lossless, but you will not miss it either. Lossless versus lossy is an argument you might have heard me present before, to the same conclusions.
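To make the linear numbers concrete, here is a minimal Python sketch of the idealized, noise-free 12-bit sensor described above. It lists how many linear code values fall inside each stop, and what a naive conversion to 8-bit (simply dropping the 4 least significant bits) would do to them. The function names are my own for illustration and do not come from any camera firmware.

```python
BITS = 12  # ideal, noise-free sensor assumed, as in the text


def linear_stops(bits=BITS):
    """List (stop_number, lowest_code, highest_code, code_count) per stop."""
    stops = []
    for k in range(bits):
        low = 2 ** k               # first code value of this stop
        high = 2 ** (k + 1) - 1    # last code value before the next doubling
        stops.append((k + 1, low, high, high - low + 1))
    return stops


def truncate_to_8bit(code12):
    """Naive 12-bit to 8-bit conversion: drop the 4 least significant bits."""
    return code12 >> 4


if __name__ == "__main__":
    for stop, low, high, count in linear_stops():
        print(f"stop {stop:2d}: codes {low:4d}..{high:4d} ({count:4d} codes) "
              f"-> 8-bit {truncate_to_8bit(low)}..{truncate_to_8bit(high)}")
```

In this idealized model the brightest stop alone takes 2048 of the linear codes, while the four darkest stops (codes 1 to 15) all collapse to 0 after the naive 8-bit truncation.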

If we remained in linear, converting from 12-bit to 8-bit would truncate the bottom 4 stops of shadow detail, and we would notice that. So a conventional 2.2 gamma curve does the following with its mapping (top 5 stops shown):

| 12-bit linear input | 8-bit gamma 2.2 output | Codes per stop |
|---|---|---|
| 256 | 73 | 19 |
| 512 | 100 | 27 |
| 1024 | 137 | 37 |
| 2048 | 187 | 50 |
| 4095 | 255 | 68 |

So gamma curves don’t fully embrace a human visual model,

with many more codes used in the brightest stop as compared with the darker

stops. The perfect scenario might be to have the 256 codes divided evenly amongst the usable stops, e.g. 11 stops would get around 23 codes per stop. Remember, this is for an ideal sensor (i.e. noise free), and that is not going to happen. The darkest usable stop is mostly noise, whereas the brightest stop is mostly signal, so we need a curve that allocates our code words with this in mind.
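For anyone who wants to play with the gamma numbers, here is a rough Python sketch of a plain 2.2 power-law mapping from 12-bit linear to 8-bit. It is a simplification: the normalization (dividing by 4095, scaling to 255) and the rounding are my assumptions, so the results can differ from the gamma table in this post by a code value or so. The name `gamma_encode` is illustrative only.

```python
def gamma_encode(linear12, gamma=2.2, in_max=4095, out_max=255):
    """Map a 12-bit linear value to 8-bit with a plain power-law gamma."""
    return round(out_max * (linear12 / in_max) ** (1.0 / gamma))


# Linear values at the stop boundaries of the top 5 stops (128 is the floor
# of the lowest stop shown, so that its code count can be computed).
STOP_BOUNDARIES = [128, 256, 512, 1024, 2048, 4095]

gamma_codes = [gamma_encode(v) for v in STOP_BOUNDARIES]
gamma_codes_per_stop = [hi - lo for lo, hi in zip(gamma_codes, gamma_codes[1:])]

# The "perfect" even split mentioned in the text: 256 codes over 11 stops.
even_codes_per_stop = 256 / 11

for value, code, per_stop in zip(STOP_BOUNDARIES[1:], gamma_codes[1:],
                                 gamma_codes_per_stop):
    print(f"linear {value:4d} -> 8-bit {code:3d} ({per_stop} codes in this stop)")
print(f"even split would be about {even_codes_per_stop:.1f} codes per stop")
```

The per-stop counts keep growing toward the highlights, which is the uneven allocation the gamma table illustrates.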

The top 5 stops of the Protune log curve:

| 12-bit linear input (idealized) | 8-bit Protune output | Codes per stop |
|---|---|---|
| 256 | 112 | 33 |
| 512 | 146 | 34 |
| 1024 | 181 | 35 |
| 2048 | 218 | 37 |
| 4095 | 255 | 37 |

While the darkest usable stop has a similar number of code words as with the gamma curve, Protune distributes the codes more evenly over the remaining stops, so more code words are reserved for shadow and mid-tone information.
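As a rough illustration of how a log curve evens out the codes per stop, here is a small Python sketch. The curve shape, `log(x * (base - 1) + 1) / log(base)`, and the value `base=113` are assumptions on my part: they were picked because they land within about one code value of the Protune table in this post, not because the post states the formula. Treat it as a stand-in, not the camera's actual implementation.

```python
import math


def log_encode(linear12, base=113.0, in_max=4095, out_max=255):
    """Map a 12-bit linear value to 8-bit with a simple log curve.

    base=113 is an assumed parameter that approximates the table above.
    """
    x = linear12 / in_max
    return round(out_max * math.log(x * (base - 1.0) + 1.0) / math.log(base))


# Linear values at the boundaries of the top stops listed in the table.
TOP_STOPS = [256, 512, 1024, 2048, 4095]

log_codes = [log_encode(v) for v in TOP_STOPS]
log_codes_per_stop = [hi - lo for lo, hi in zip(log_codes, log_codes[1:])]

for value, code in zip(TOP_STOPS, log_codes):
    print(f"linear {value:4d} -> 8-bit {code:3d}")
print("codes per stop (upper four stops):", log_codes_per_stop)
```

With this assumed curve the upper stops each receive a nearly equal share of codes, in contrast to the steadily growing counts of a 2.2 gamma.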

While I glossed over this before, again, why not just have 23 code words per stop? This has to do with compression and noise. Noise is not compressible, at least not without it looking substantially different from the input, and the compressor, whether H.264, CineForm or any other codec, can’t tell signal from noise. So if too many code words represent noise, either quality or data rate has to give. The Protune curve shown above will produce smaller files, and generally be more color correctable, than using fixed code words per stop. We have determined the best curve to preserve dynamic range without wasting too much data on preserving noise.
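The claim that noise does not compress is easy to try for yourself. The sketch below uses Python's `zlib`, a general-purpose lossless compressor, purely as a stand-in; it is not H.264 or CineForm and behaves very differently from a video codec. The synthetic ramp, the noise amplitude of plus or minus 4 codes, and the fixed random seed are all arbitrary choices for illustration.

```python
import random
import zlib


def compressed_size(samples):
    """Size in bytes of the zlib-compressed 8-bit sample sequence."""
    return len(zlib.compress(bytes(samples), 9))


random.seed(0)  # fixed seed so the run is repeatable
N = 4096

# A smooth 8-bit gradient, standing in for clean image signal.
ramp = [(i * 256) // N for i in range(N)]

# The same gradient with a few codes of random noise added, clamped to 0..255.
noisy = [min(255, max(0, v + random.randint(-4, 4))) for v in ramp]

clean_size = compressed_size(ramp)
noisy_size = compressed_size(noisy)

print(f"clean gradient: {clean_size} bytes")
print(f"noisy gradient: {noisy_size} bytes")
```

The noisy version comes out larger even though it carries no extra picture information, which is the trade-off behind not spending too many code words on the noisiest stops.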

Side note for other RAW cameras: We have extended the knowledge gained while developing the Protune curve to calculating the best log curve for a particular dynamic range. This feature is now included in the commercial versions of CineForm Studio (Windows versions of Premium and Professional), so that RAW camera shooters, from Canon CR2 time-lapse videography to Blackmagic CinemaDNG files, can optimize the log encoding of their footage. Of course transcoding to CineForm RAW at 12-bit rather than 8-bit H.264 helps greatly, yet the same evening out of the code words per stop applies as it does in the HERO2 camera running Protune.

Protune couldn’t exist as just a log curve applied on top of the existing HERO2 image processing pipeline; we had to increase the bit-rate so that all the details of the wider dynamic range image could be preserved. But we didn’t stop there. As we tuned the bit-rate, we also tweaked the noise reduction and sharpening, turning both down so that much more natural detail is preserved before compression is applied (at the higher data rate required to support more detail). Automatically determining what is detail and what is noise is a very difficult problem, so deferring more of these decisions to post allows the user to select the level of noise reduction and sharpening appropriate to their production. I personally do not apply post noise reduction; I am happy working with Protune as it comes from the camera, adding sharpening to taste.

The CineForm connection:

35Mb/s H.264 is hard to decode, much harder than 15Mb/s. So transcoding to a faster editing format certainly helps, and that comes for free with GoPro CineForm Studio software. Also, the new Protune GoPro clips carry metadata that CineForm Studio detects and automatically develops to look more like a stock GoPro mode, cool-looking and ready for show. All these changes are stored as CineForm Active Metadata, and are non-destructive and reversible, all controlled with the free CineForm Studio software. GoPro is working to get professional features into the hands of the everyday shooter, and the CineForm codec and software are an increasing part of that solution.

There is so much to this story, but I’m sure I’ve gone on

too long already. Thank you for reading.

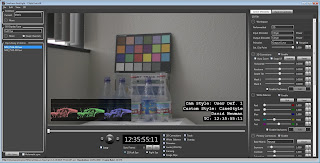

P.S. Sorry for the lack of sample images. Protune launched while I am on vacation, and my internet connection is way limited at the moment.

---

Added sample images in the next blog Why I Shoot Protune -- Always!

As regular readers know, I have had a team in the 48 Hour Film Project every year since its beginning in San Diego. This year we came second in the whole competition, competing against a record 64 teams. We also received the audience award for our premiere screening and best sound design. We do not have a professional team; we only do this once a year with friends and family. For example, our festival-winning audio was operated by a 12 year old, who was an actress for us in a previous year (thank you, Julianna). The one exception is Jake Segraves (you may have corresponded with him through CineForm support) who does not quite have amateur status like the rest of us, having some real world production experience. Still, Jake and I shot two-camera scenes with our personally owned Canon 7Ds, with a single cheap shotgun mic on a painter's pole (attached with gaffer tape) recording to a Zoom H4n I got secondhand off a Twitter friend. The only additional camera for our opening scene was a GoPro time-lapse, shot while we were setting up for the first part of the shoot. This was not a gear fest; fast and light is key for extreme filmmaking within 48 hours.
